Introducing the link analysis blog series, about the KB web archive, upcoming blogposts and links to published blogposts.

Last year, as a proof-of-concept for the Netwerk Digitaal Erfgoed (NDE), I performed a link analysis with the web collection from the KB – the National Library of The Netherlands. The goal of this analysis was to ascertain to which social media were linked from the archived websites in our collection. We also wanted to get a historical overview, so we selected four years (2010, 2013, 2016 and 2019) and analysed for each year the harvests of the month September.

Because our archive does not have handy analysing tools on top of its data, we had to begin from scratch: extracting, pre-processing and analysing raw data step by step. This series of blogposts will describe my practical experiences and challenges during this process. It will also describe how choices in settings influenced the visualisations and show some preliminary results. During this whole process there were two main topics: What are the problems when preforming a link analysis on a web collection and what data is not or barely visible in the results and why is this so?

About the KB web collection

The KB has a selective web archiving policy. We harvest a limited number of sites, which form our web collection, because this is more in line with the remit of the KB, the available resources and the chosen legal approach. This means that more attention can be paid to technical details and websites can be archived down to the deepest level. The selection is based on the KB's collection policy. Within this framework, a cross section of the Dutch domain is selected for archiving. Primarily, we select websites with cultural and academic content, but we do include websites of an innovative character which exemplify present trends on the Dutch web domain. Finally, we take into account relevance for Dutch society and popularity on the web.

To archive the Dutch web the KB primarily uses (and co-develops) the Web Curator Tool (WCT) in combination with webcrawler Heritrix developed by the Internet Archive. The WCT is a tool for managing selective web harvesting for non-technical users. The underlying database is a Postgresql database. In these blogposts I will not go into detail about how our web archive works, but if you like to know more please read ‘Historical growth of the KB web archive’. Hopefully this will tell you a bit about our archive.

For more information about the KB web collection visit their page on the KB website (Dutch). You can also find more information about the selection, technical issues and legal issues on their FAQ page (Dutch).

About me and we

My name is Iris Geldermans and I am currently working as junior researcher web archiving at the KB. I have been doing so for about one and a half years. Before this I also worked on quality assurance within the KB web collection. I hold a bachelor in History with a minor in Digital Humanities and a master in Public History (history in public spaces).

When I’m writing about ‘we’, I usually mean the amazing web archiving team (from collection specialists to programmers) at the KB without whom these blogposts would not have been possible. Working with and learning from a multidisciplinary team like ours has been and still is invaluable!

Let’s start blogging

So with some general information now out of the way, I would like to dig into the subjects of these blogposts. The blogposts are split into two subsections. On the one hand I would like to provide some practical workflow descriptions:

- How did we select and extract (meta)data

- How did I made this data suitable for network visualization (pre-processing work)

- How did I visualise the data in Gephi

The second part deals with major lessons learned:

- Dealing with the consequences of my pre-processing choices

- How to visualize an incomplete archived web collection

- Reviewing the results

- Do’s and don’ts for researching an archived web collection

Note: this is still work in progress! Subjects may change.

Below I will provide a short introduction about what each blogpost is roughly about and links to the individual posts.

Published posts:

Workflow descriptions:

About the reason for starting with this research, formulating a research question, selecting harvests and getting some data. Also containing information about extracting data and metadata from WARC files and WCT.

Pre-processing large datasets with Excel, HeidiSQL and MariaDB.

About my experiences with Gephi: the steps I took, what worked and what did not work.

Lessons learnt and results:

4. Wait…. Where did Hyves go?!

My pre-processing approach shows some flaws. Also: can I compare websites with Social Media platforms?

Upcoming post:

Lessons learnt and results:

5. Two years later.....

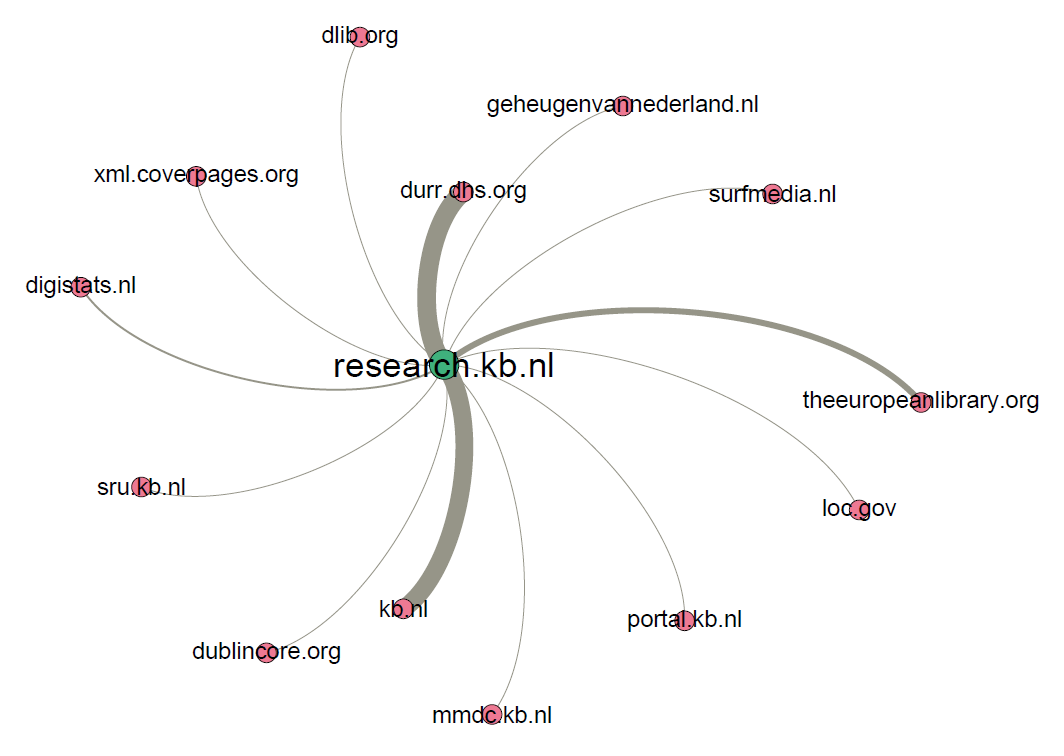

Figure 1. Small link visualisation of an old (and now offline) KB website: research.kb.nl. Harvested in September 2016.

Related

Next: Let's get some data - Link analysis part 1

Glossary

Anchor link – for our research these are hyperlinks with a href tag (<a>, <link>, <area>)

Embedded link – for our research these are hyperlinks without href tag (<img>, <script>, <embed>, <source>, <frame>, <iframe> and <track>)

HeidiSQL - lets you see and edit data and structures from computers running one of the database systems MariaDB, MySQL, Microsoft SQL, PostgreSQL and SQLite. For our research we use MariaDB.

Gephi - a visualization and exploration tool for all kinds of graphs and networks. Gephi is open-source and free.

MariaDB - a relational database made by the original developers of MySQL, open source.

Target Record (or Target) – Contains overarching settings such as schedule frequency (when and how often a website is harvested) and basic metadata like a subjectcode or a collection label.

Target Instance - is created each time a Target Record instructs Heritrix to harvest a website. Contains information about the result of the harvest and the log files.

WARC – file type in which archived websites are stored. The KB archive consists of WARC and its predecessor ARC files. This project was done for both types of files.

WCT – Web Curator Tool is a tool for managing selective web harvesting for non-technical users.

Web collection – For the KB: they have a selective web archiving policy were they harvest a limited number of sites. You can find an overview of all archived websites at the KB on their FAQ page [Dutch].