About the reason for starting with this research, formulating a research question, selecting harvests and getting some data. Also containing information about extracting data and metadata from WARC files and WCT.

Finding a suitable starting point

In 2020 my colleagues Sophie Ham and Kees Teszelszky presented their proposal for a link analysis to the Netwerk Digitaal Erfgoed (Network Digital Heritage). The goal was to extract hyperlinks that referred to social media from parts of the KB web collection. They wanted to gain insight into which type of social media was present in the collection so they would get a clear idea what was not being harvested (as the KB presently does not harvest social media). Perhaps even identify what should be harvested in the future. A second goal was to become familiar with the technique of a link analysis as an aid for the web archiving process and as tool for researching it.

Their original idea was to start with analysing the presence of social media within the XS4ALL collection. However this turned out to be difficult because the collection tags are not found within the WARC files. They are found in the Web Curator Tool (WCT) database in an (varchar) annotation field or the (also varchar) subject field. To connect WARCs with WCT turned out to be possible, but only further on in the project. When we started we decided on a simpler approach: analysing the historical growth of social media within the KB web collection narrowed down to specific years and months.

For this purpose we chose the month September in the years 2010, 2013, 2016 and 2019 and extracted hyperlinks from websites harvested in those months. We chose September because this month has no holidays, is an active period (start of the academic year) but not the most active month. For example: we harvest a lot of websites in December because of several holidays. This is a busy month with lots of special collections harvests. September, on the contrary, is more balanced. We picked 2010 as a starting point because we felt this was the year the KB web archiving activities ended its starting phase. The KB started archiving in 2007 and we felt that in 2010 the archive was solid enough to get a link analysis we could compare with other years. Because this project started in 2020 it made sense to end the analysis in 2019 as sometimes websites can be harvested for several days or even weeks.

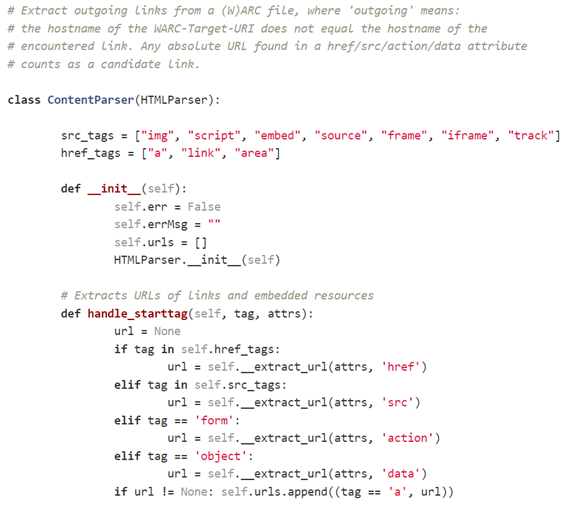

After we adjusted our research question and made our selection, it was time to determine what kind of data we needed to perform a link analysis. Of course we needed the page on which the URL was found and the URL found. For the raw data extracting phase these would be whole, not edited, hyperlinks. For the URL found we also wanted to know whether it was an anchor or embedded link. Anchor links would be (for our research) hyperlinks with a href tag (<a>, <link>, <area>) and embedded links would be hyperlinks without href tag (<img>, <script>, <embed>, <source>, <frame>, <iframe> and <track>). A timestamp would also be added (more on this later). This information would be compiled in lists were each column was separated by spaces. I also wanted some ID numbers connecting the WARC file to the WCT. This way, in future research, we could add metadata from the WCT to a result and filter out (for example) web collections such as XS4ALL-homepages. This proved somewhat difficult, but lucky for me I had help from an amazing developster that made it work!

Selecting data from WCT

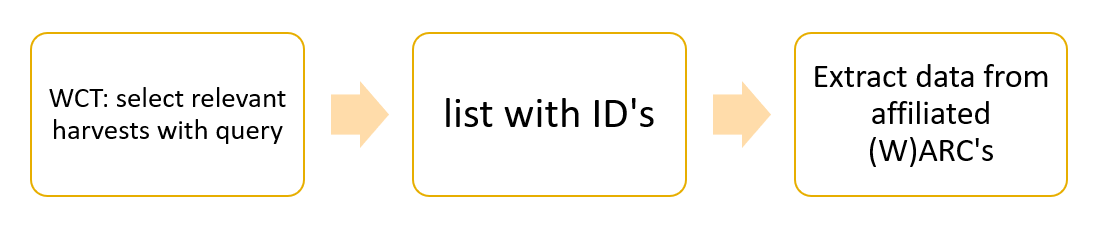

The process we used for this research was to select all harvests we wanted for our research from the WCT and then extract the actual data from the affiliated (W)ARC files. The Web Curator Tool is a tool the KB uses for managing selective web harvesting. We further use a (PostgreSQL) database that gets filled with information from WCT and which we can query. I do not elaborate on the web archiving process in detail here, but if you wish to learn more please visit the Historical growth of the KB web archive.

We can select all separate website-harvests from WCT because the KB has a selective archive. Each website we harvest gets a unique ID called a Target Record ID. Each time this Target Record gets harvested the actual harvest also gets a unique Target Instance ID. These ID’s are also added to the WARC files that are created. This is how we connect them. With a list containing Target Instance ID’s from the WCT we went to our archive and extracted the data from the files that belonged with the selected harvests.

But which ID’s did we want? At first we wanted ID’s that were connected with a collection label. This was difficult though, because this information is found in free fields (the annotation field and the subject field). Free fields are nice, but also have their challenges. Most of all: consistency. Not everyone fills out the field in the same way (though we try very hard to do!). For the limited time we had it made more sense to use an easier, more consistent metadata field. Therefor we went with harvest date. This information gets added in a very structured (mostly automatic) way which made it consistent and easier to query.

Now, there are a lot of date-metadata-fields to be found when working with a web archive. A lot. At first we used the scheduled_time field as a base for our query. The time a Target Instance was planned to be harvested. But as it turned out, the scheduled_time can change. Some Target Instances harvested earlier or later than planned. We therefor swapped to the start_time field (the time the Target Instance actually started harvesting) as a base for selecting relevant harvests (from the month September in 2010, 2013, 2016 or 2019). The code selecting the Target Instances (the where part) looked like this:

With a list of ID’s at the ready we progressed to the next stage: actually getting some hyperlinks.

Extracting data from (W)ARC files

Now I was reasonably experienced working with the WCT when I started this project. Though I did not extract the list of ID’s I did communicate heavily with my colleague Hanna Koppelaar about what I was searching for and what was possible. She then made the list and used it as base for extracting the data. As I am no programmer I did need a lot of help with the actual method of extracting the hyperlinks from the WARC (and ARC) files. So all credits for this part go to my colleague Hanna!

The base of extracting hyperlinks was finding src_tags and href_tags. We chose to get as many hyperlinks as we could from the WARC files because filtering out hyperlinks later is easier than adding them to a dataset. Here is the part of the code Hanna wrote to find these tags:

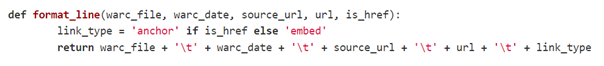

After finding the hyperlinks we ended with lists containing the following information: name of the WARC file, URL of the page where the hyperlink was found, URL that was found, type of the URL that was found (anchor or embed) and the warc_date.

(Again written by Hanna Koppelaar:)

The warc_date is not the start time from a Target Instance (the time and date a harvest starts in general), as I mentioned previously, but is the time and date when a specific resource was harvested. The time and date stamp were extracted from the WARC header from that specific resource. This caused an issue, mainly because I misunderstood and got the two confused with each other. (Again, there are lots of dates in web archives.) Only now as I am revisiting my steps I realised my mistake. But let me explain: sometimes a harvest started in September but took so long that it also harvested resources in October. When I started pre-processing (I am may getting ahead a bit, but let’s mention it here instead of the next blogpost about pre-processing) I noticed I had records with an October date. Because I thought this date was the Target Instance start date, I deleted those records. In the dataset 2016 for example I removed 20.780 rows with URLs harvested in October first and second because of this. This shows the importance of knowing your data and understanding what you get. Do not assume but always ask, check and double check!

The last information which was added to the output were the WCT ID numbers. This was added through a separate shell script. The WCT numbers were added for further research possibilities as described above; so we could now join the extracted hyperlinks with WCT metadata if we liked.

The information with which I started the next pre-processing step were four separate datasets (one for each year) filled with rows of the following information:

[name WARC] [date and time a harvest resource was harvested] [page on which the link was found] [link found] [anchor / embed] [WCT Target ID] [WCT Target Instance ID].

IAH-20160906092447-123456-webharvest01.kb.nl.arc.gz 2016-09-06T09:24:50Z http://research.kb.nl/index-ga.html http://www.geheugenvannederland.nl anchor 29524058 29460829

Learn from my mistakes….

When I started this project I had little programming skills. But I did have knowledge about the KB web collection (about its composition and WCT metadata) and an idea about what information I needed for this project. This is important I think because I had to clearly communicate with a developster about what I needed for this research. You cannot just say ‘give me everything you got’, because even our selective KB archive is vast and there are many kinds of metadata fields to choose from. Of course your research idea can change in the course of the project (ours did), but when you are working in an interdisciplinary team it is useful to be able to communicate clearly. Not only with developers but also with collection specialists. This means you need to invest some time in learning new terminology (src_tags, href_tags, anchor, embed), learn about the composition and content of an archive and delve into a WARC to learn which metadata can be found there. And even when you get your data always make sure you understand what it is you get. As I learned: there are many date- and timestamps and it’s easy to get them confused.

In the next blogpost (working title: How many hyperlinks?!) I will tell about the practical process of pre-processing this data to make it suitable for Gephi. And for those of you that paid a close eye on the code: I will also explain why the separating columns through spaces was swapped to tabs.

Related

Previous: Analysing hyperlinks in the KB web collection.

Next: How many hyperlinks?! - Link analysis part 2

Glossary

Anchor link – for our research these are hyperlinks with a href tag (<a>, <link>, <area>)

Embedded link – for our research these are hyperlinks without href tag (<img>, <script>, <embed>, <source>, <frame>, <iframe> and <track>)

HeidiSQL - lets you see and edit data and structures from computers running one of the database systems MariaDB, MySQL, Microsoft SQL, PostgreSQL and SQLite. For our research we use MariaDB.

Gephi - a visualization and exploration tool for all kinds of graphs and networks. Gephi is open-source and free.

MariaDB - a relational database made by the original developers of MySQL, open source.

Target Record (or Target) – Contains overarching settings such as schedule frequency (when and how often a website is harvested) and basic metadata like a subjectcode or a collection label.

Target Instance - is created each time a Target Record instructs Heritrix to harvest a website. Contains information about the result of the harvest and the log files.

WARC – file type in which archived websites are stored. The KB archive consists of WARC and its predecessor ARC files. This project was done for both types of files.

WCT – Web Curator Tool is a tool for managing selective web harvesting for non-technical users.

Web collection – For the KB: they have a selective web archiving policy were they harvest a limited number of sites. You can find an overview of all archived websites at the KB on their FAQ page [Dutch].