It’s familiar to many of us: stumbling upon a person or a place name somewhere on Wikipedia, getting curious about it and clicking through the links to learn more. But what if you see an interesting name while reading a digitised old book instead? Can the curiosity-driven journey be just as easy and fascinating then? We hope it can – thanks to entity linking.

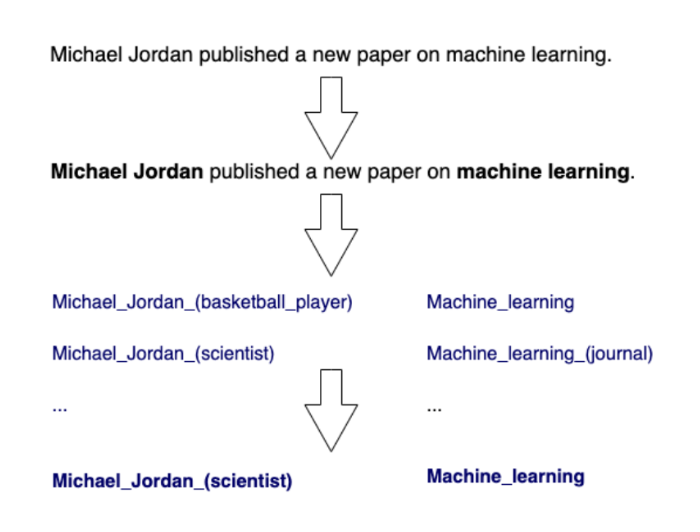

Entity linking is the task of connecting unstructured text to a structured knowledge base. It consists of three main steps: identifying named entities (names of people, places, and possibly other things such as organisations) in the text; finding candidate entries in the knowledge base for each entity; and choosing one answer from each set of candidates. These steps are referred to as named entity recognition, candidate retrieval, and entity disambiguation respectively. The knowledge base in question can be a well-known resource such as Wikipedia or Wikidata, or a domain-specific thesaurus such as the DBNL Authors thesaurus [1]. Figure 1 illustrates an example of entity linking done in three steps.

Figure 1: an example of entity linking.

The applications of entity linking are not limited to facilitating a rabbit hole journey for curious readers: it has many more serious use cases, such as making a corpus of documents easily searchable to help researchers in different domains, from medicine to art history. In the case of digital humanities and heritage, entity linking can be particularly helpful since it allows to save expensive manual efforts on metadata generation.

The task of entity linking has been on the research radar for many years, and the results on popular benchmarking datasets look like the task is almost solved, with the latest neural models achieving accuracy scores above 90%. In reality, however, things are not quite as straightforward: some domains are particularly challenging for automatic entity linking methods, and Dutch historical texts are a fine example of such a domain. We identify three main challenges:

- The language. While plenty of training data, open-source models and research papers is available for high-resource languages such as English or French, Dutch is considered to be a mid-resource language, with a limited scope of prior work to start from. When dealing with historical documents, another dimension of challenge is added: all languages evolve over time, and Dutch is no exception, with no standardised spelling available prior to the 1860s [2].

- The noise. OCR quality is a problem familiar to everyone who works with digitised documents: even when a page is in good condition, some words and characters end up being corrupted, making the text harder to understand – both for human readers and for automatic language models. This leads to errors in downstream tasks, such as entity recognition. Generally, the older a digitised document is, the more OCR noise it contains. While DBNL, the collection of books at the KB, has an excellent OCR quality thanks to extensive post-correction efforts, other collections, such as Delpher, are considerably affected by OCR mistakes.

- The shadows. Prior research has shown that entity disambiguation can be especially tricky when a wrong candidate entity in a knowledge base is more popular than the correct one: this phenomenon is known as entity overshadowing [3]. A well known example is the sentence “Michael Jordan published a paper on machine learning”: while for a human reader it is clear that the answer is Michael Jordan the scientist, most of the current entity linking models would output the link to Michael Jordan the basketball player, since this entity has occurred more often in their training data. Similar problems are expected to be present when linking entities in Dutch historical documents: some historical names may be overshadowed by their contemporary counterparts.

The project and the challenges

As a researcher whose professional interests lie at the intersection of AI and digital humanities, I find it fascinating to connect the latest technology advancements with ages-old cultural heritage. This is why I was so excited when my project proposal was accepted for the researcher-in-residence program at the KB, giving me access to the marvellous data collections and an opportunity to work in an amazing team. Since July 2023 I’ve been working in collaboration with Marieke Moolenaar and Willem Jan Faber, as well as getting some helpful advice from Michel de Gruijter and Sara Veldhoen. I have learned a lot about linked data, had a chance to dive deep into the challenges of historical Dutch texts, and submitted a research paper to one of the top international conferences on language resources (at the time of writing this post, the paper is under review and cannot be shared due to the anonymity policy of the conference, but it will be described in detail in my next blog post).

When working on a time-constrained research project, one of the main challenges is to decide which ideas are feasible to implement and which ones need to be left for future work. The original plan was to follow the latest trends in entity linking and create an end-to-end neural entity retrieval model that would connect historical texts with Wikidata pages, as well as compare this model with an existing baseline both in terms of the “classic” metrics such as precision and recall and in terms of robustness to OCR noise and entity overshadowing. The first obstacle turned out to be finding such a baseline: while plenty of open-source entity linking models exist for English, no publicly available model for Dutch could be found, leaving us with the option to implement a simple baseline from scratch. Another challenge is entity retrieval itself: training an end-to-end model is very resource-heavy, with state-of-the-art English-language models using more than 2GB of training data [4]. While DBNL does contain a vast amount of text, not many entities are linked to Wikidata: DBNL uses an internal thesaurus where only a part of the entries has a corresponding Wikidata identifier. Moreover, the entities in DBNL are not linked explicitly: instead, they are mentioned as metadata at the beginning of each chapter, so additional string matching needs to be used to prepare the datasets for entity linking. Last but not least, Wikidata itself is a source of challenge: existing entity retrieval methods use Wikipedia instead. The reason is that entity retrieval relies on text similarity: a candidate entity is chosen when its textual description is the closest to the context of the target entity (the “query”) in a vector space learned by the model. While Wikipedia pages provide a rich source of textual context for the candidate entities, the entity descriptions on Wikidata are much shorter and might not be sufficient for learning text similarity. On the other hand, using Wikipedia instead of Wikidata would be a suboptimal choice for our task, since Wikidata is more commonly used in digital humanities thanks to its considerably larger size and better coverage of historical entities.

The progress and the next steps

With the data-related challenges in mind, we decided to start with a “classic” step-by-step approach to entity linking and then explore the possibilities for end-to-end entity retrieval if time allows. The first step involves named entity recognition: the task of automatically detecting people, places and other entities mentioned in text. To do so, I used the manually annotated historical dataset from the Dutch Language Institute [5] to train three different neural models, measured their performance on both historical and contemporary data, and analysed the effect of time. This sub-project has resulted in a research paper which is currently under review and will be described in my next post, as well as an open-source software contribution that can be used in future projects at the KB.

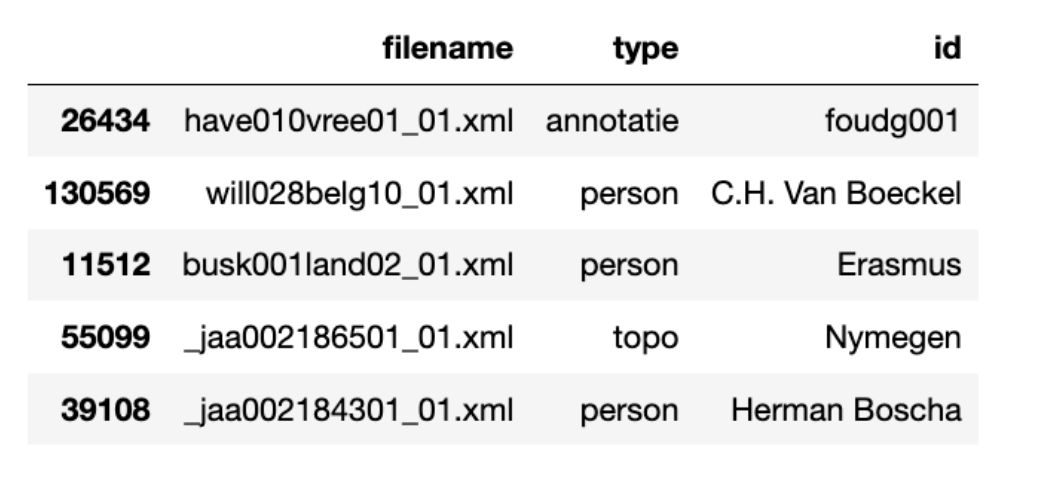

To prepare for the second step of entity linking, we explored the public domain part of DBNL[6]. The entity-related data is structured as follows: the beginning of every chapter contains primary annotations (“this text is written by author N”) as well as secondary annotations (“this text is written about person X or place Y”), both linked to an internal thesaurus. The names of N, X or Y may or may not be present in the text itself. Some chapters are explicitly annotated with the “name” tags, which mark an entity inside the text but do not contain any information about the entity identifier in the thesaurus. Figure 2 shows an example of the annotations.

Figure 2: a random sample of annotations in the public domain part of DBNL. Here, “person” and “topo” are examples of name tags inside the text, referring to a person or a place respectively, and “annotatie” is an example of a secondary annotation.

The identifiers in the internal thesaurus of DBNL consist of a prefix and a number, where the prefix is taken from the last name of a person or the name of a place, and the number is used for disambiguation: for example “amste001” refers to Amsterdam. This allows us to extract the linked entities from the chapters by matching a name tag with the corresponding secondary annotation. Out of 774163 annotations available in the public domain part of DBNL (name tags and secondary annotations combined), we extracted 36475 entities using fuzzy matching between name tags and secondary annotations.

To implement a candidate retrieval method limited to the internal thesaurus of DBNL, one could use a simple rule-based approach: look for all entity identifiers starting with the first five letters of a given entity. In our project, however, an entity linking method needs to generalise to other collections. For instance, we want to be able to test it on the KB entity linking dataset which was created in 2016 from Delpher texts and annotated with Wikidata entities [7] . Thus, the next steps in our project include finding connections between the DBNL thesaurus and Wikidata, using these connections to annotate the data in the publicly available part of DBNL, and releasing the resulting dataset to stimulate further research in entity linking on Dutch historical documents.

Notes

- DBNL. Alle auteurs. In: DBNL [Internet]. [cited 30 Nov 2023]. Available: https://www.dbnl.org/auteurs/

- Donaldson BC. Dutch:A Linguistic History of Holland and Belgium. M. Nijhoff; 1983.

- Provatorova V, Vakulenko S, Bhargav S, Kanoulas E. Robustness Evaluation of Entity Disambiguation Using Prior Probes:the Case of Entity Overshadowing. arXiv [cs.CL]. 2021. Available: http://arxiv.org/abs/2108.10949

- De Cao N, Izacard G, Riedel S, Petroni F. Autoregressive entity retrieval. arXiv preprint arXiv:201000904. 2020.

- AI-Trainingset - Tag de Tekst voor Named Entity Recognition (NER). In: INT Taalmaterialen [Internet]. Instituut voor de Nederlandse Taal; 18 May 2022 [cited 30 Nov 2023]. Available: https://taalmaterialen.ivdnt.org/download/aitrainingset1-0/

- DBNL. Collectie publiek domein. In: DBNL [Internet]. [cited 30 Nov 2023]. Available: https://www.dbnl.org/letterkunde/pd/index.php

- van Veen T, Lonij J, Faber WJ. Linking Named Entities in Dutch Historical Newspapers. Metadata and Semantics Research. Springer International Publishing; 2016. pp. 205–210.